- #DOWNLOAD SPARK WITH HADOOP INSTALL#

- #DOWNLOAD SPARK WITH HADOOP UPDATE#

- #DOWNLOAD SPARK WITH HADOOP DRIVER#

In our case we have set up the NameNode as the Spark master node. The Spark Application UI is also available.

Let us configure an EdgeNode (client node) to access Spark. Log on to the EdgeNode and secure copy (`scp -r`) the Spark directory from the NameNode into /usr/local/. After that, set the environment variables on the EdgeNode accordingly by editing `~/.bashrc` (`# vi ~/.bashrc`).

All set, so let's log in to the Spark shell and run an interactive map-reduce program in Spark. The sample input is a data file in HDFS that we used during our Pig installation and basics tutorial, and we will create an HDFS directory to hold the output file. Start the shell with `# $SPARK_HOME/bin/spark-shell --master yarn`, then load the file with `scala> val rdd_profit = sc.textFile("/pig_analytics/Profit_Q1.txt")`. The shell reports `rdd_profit` as an RDD over /pig_analytics/Profit_Q1.txt, a MapPartitionsRDD created by textFile at line 24 of the console.

Running `# jps` lists the Java processes on the node; on the NameNode the output includes `5721 NameNode`. Browse the Spark Master UI for details about the cluster resources (such as CPU and memory), worker nodes, running applications, segments, etc.

Repeat the above step for all the other DataNodes. With that, the installation and configuration are done, so it is time to start the Spark services. Once they are up, let us validate the services running on the NameNode as well as on the DataNodes.
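The validation step can be scripted around `jps`. The following is a minimal sketch, not the article's own procedure: the helper names `has_daemon` and `report` are hypothetical, and it assumes that the Spark standalone daemons appear in `jps` output as `Master` and `Worker`, which is how they are usually listed.

```shell
#!/usr/bin/env bash
set -euo pipefail

# has_daemon NAME (hypothetical helper): reads jps-style lines
# ("<pid> <class name>") on stdin and succeeds if any line's
# second field equals NAME.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# report NAME (hypothetical helper): runs jps if it is on PATH and
# prints whether a process called NAME is listed.
report() {
  if ! command -v jps >/dev/null 2>&1; then
    echo "jps not found on PATH; skipping check for $1"
    return 0
  fi
  if jps | has_daemon "$1"; then
    echo "$1 is running"
  else
    echo "$1 is NOT running"
  fi
}

# On the NameNode, which also hosts the Spark master in this setup:
report NameNode
report Master   # assumed jps name of the Spark standalone master

# On each DataNode, check for the worker instead:
# report Worker
```

Matching on the second field rather than grepping the whole line avoids false positives from process names that merely contain the word being searched for.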

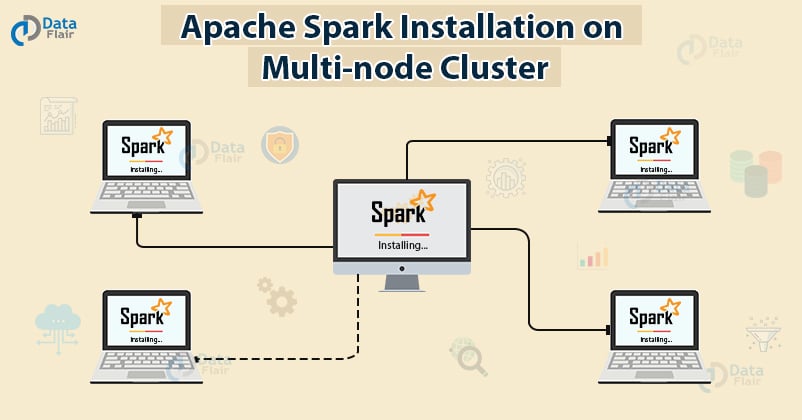

The Spark environment script is set up from Spark's conf directory: run `# cp spark-env.sh.template spark-env.sh`, then `# vi spark-env.sh`, and append the lines `export JAVA_HOME=/usr/lib/jvm/java-7-oracle/jre` and `export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop`.

Next we have to list the DataNodes that will act as slave servers. Open the slaves file and add the DataNode hostnames.

Now we have to configure our DataNodes to act as slave servers. We will secure copy the spark directory, with its binaries and configuration files, from the NameNode to each DataNode, for example `# scp -r spark DataNode2:/usr/local`, using the same command with the hostname of every other DataNode. Next we need to update the environment configuration of Spark on all the DataNodes.
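Writing the slaves file and copying the Spark directory to every DataNode can be done in one loop. This is a minimal sketch under stated assumptions: `DataNode2` comes from the article, `DataNode1` is an assumed hostname, and `SPARK_CONF_DIR` and `DRY_RUN` are illustrative variables introduced here. By default it writes to a scratch directory and only prints the copy commands.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Host list: DataNode2 appears in the article; DataNode1 is an assumption.
DATANODES=("DataNode1" "DataNode2")

# Assumption: SPARK_CONF_DIR is Spark's conf directory. It defaults to a
# scratch directory so the sketch can be tried without a Spark install.
SPARK_CONF_DIR="${SPARK_CONF_DIR:-$(mktemp -d)}"

# The slaves file holds one worker hostname per line.
printf '%s\n' "${DATANODES[@]}" > "$SPARK_CONF_DIR/slaves"

# DRY_RUN=1 (the default here) only prints the copy commands.
# Set DRY_RUN=0 and run from the directory that contains "spark"
# to actually copy it to each DataNode.
DRY_RUN="${DRY_RUN:-1}"
for host in "${DATANODES[@]}"; do
  if [ "$DRY_RUN" = "1" ]; then
    echo "scp -r spark ${host}:/usr/local"
  else
    scp -r spark "${host}:/usr/local"
  fi
done
```

Keeping the host list in one array means the slaves file and the copy targets cannot drift apart when a DataNode is added.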

Append the required lines to the file, then source the environment file. Next we need to configure the Spark environment script to set the Java home and the Hadoop configuration directory. Copy the template file, then open spark-env.sh and append the lines to it.
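The same spark-env.sh change can be made without an interactive editor. This is a minimal sketch, assuming the two paths given in this article are valid on your hosts. `SPARK_CONF_DIR` is an illustrative variable that should point at Spark's conf directory; here it falls back to a scratch directory with a stand-in template so the snippet can be tried safely.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: SPARK_CONF_DIR points at Spark's conf directory.
# It defaults to a scratch directory for experimentation.
SPARK_CONF_DIR="${SPARK_CONF_DIR:-$(mktemp -d)}"

# Stand-in for the shipped template when no real install is present.
[ -f "$SPARK_CONF_DIR/spark-env.sh.template" ] || \
  echo '#!/usr/bin/env bash' > "$SPARK_CONF_DIR/spark-env.sh.template"

# Copy the template, as described in the article.
cp "$SPARK_CONF_DIR/spark-env.sh.template" "$SPARK_CONF_DIR/spark-env.sh"

# Append the two settings (paths are the article's; adjust for your hosts).
cat >> "$SPARK_CONF_DIR/spark-env.sh" <<'EOF'
export JAVA_HOME=/usr/lib/jvm/java-7-oracle/jre
export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop
EOF

# Show the appended lines.
tail -n 2 "$SPARK_CONF_DIR/spark-env.sh"
```

The quoted heredoc delimiter (`'EOF'`) keeps the lines literal, so nothing is expanded by the shell that writes the file.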

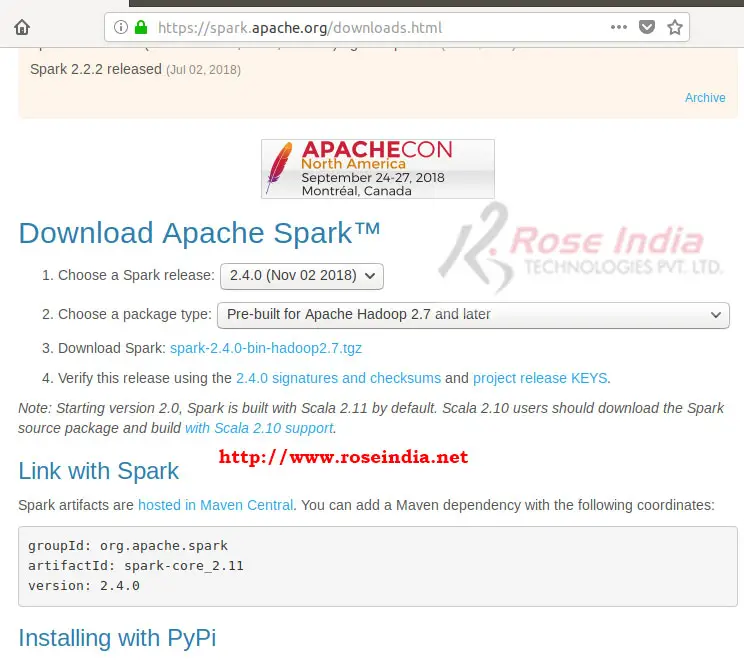

We will configure our cluster to host the Spark master server on our NameNode and the slaves on our DataNodes. Let's ssh into the NameNode and start the Spark installation. Select a stable Spark release that is compatible with your Hadoop version from the Spark download page. At the time of writing this article, Spark 2.0.0 is the latest stable version. We will install Spark under the /usr/local/ directory.

Spark provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs, so applications can be written quickly in any of these languages. It also powers a rich stack of libraries and higher-level tools for complex analytics, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming for streaming data. Its core abstraction is the resilient distributed dataset (RDD), a fault-tolerant collection of elements that can be operated on in parallel. There are two ways to create RDDs: parallelizing an existing collection in your driver program, or referencing a dataset in a storage system that offers a Hadoop InputFormat, such as HDFS or HBase.
